Containerization with Docker has become very popular, allowing many teams to build lightweight, Dockerized infrastructure with useful features such as fast code deployment.

By design, Docker lets its containers connect out to the external network. With the default configuration, however, you cannot reach the containers from the outside unless you explicitly publish their ports.

Restricting access to Docker containers from the outside world is good for security, but it becomes a problem whenever you do need outside access, for example when testing an application or hosting a website.

In this article, we will look at the Docker commands that expose container ports, make them accessible from the outside network, and bind them to ports on the host. You can also take a closer look at other useful Docker commands in our separate article on the topic.

Port binding in Docker

Let's assume that we want to run NGINX in a Docker container. You can pull the NGINX image and start a container, but you can't access that container from the outside.

By default, Docker containers use an internal network, and each container has its own IP address that is reachable from the Docker machine (the host). These internal IPs can't be used to reach containers from outside; the Docker machine's primary IP, however, is reachable from the external network.

To let users reach the web server from the internet, we bind the container's port 80 to a port on the host machine, let's say 7777. Users then reach the web server's port 80 through port 7777 on the host machine.


Exposing Docker ports while creating a Docker container

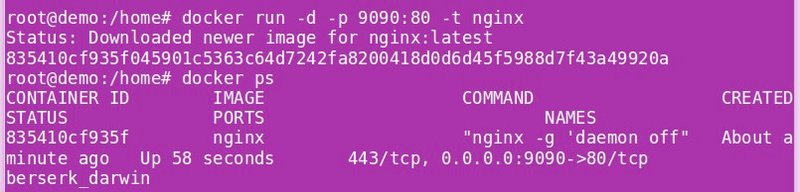

To expose Docker ports and bind them when starting a container with docker run, use the -p option, as in the following command:

docker run -d -p 9090:80 -t nginx

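This creates an NGINX container and binds its internal port 80 to port 9090 on the Docker machine. Note the order in the -p value: the host port comes first and the container port second (HOST_PORT:CONTAINER_PORT).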

Check the result with the docker ps command: its PORTS column lists the binding.

Use the docker inspect command to view the container's port bindings in detail.

From then on, the container's internal port 80 is reachable through the Docker machine's IP on port 9090.

Exposing multiple Docker ports

Now we know how to bind one container port to a host port. In some cases, however (for example, in a microservices architecture), you need to set up multiple Docker containers with many dependencies and connections.

In such cases, you can map a range of ports on the Docker host to a single container port:

docker run -d -p 7000-8000:4000 web-app

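This binds the container's port 4000 to an available port in the 7000-8000 range on the host. To declare which ports the container listens on, add an EXPOSE instruction to the Dockerfile. Keep in mind that EXPOSE only documents the port; it is not published unless you also pass -p or -P to docker run: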

EXPOSE <CONTAINER_PORT>

Exposing a Docker port on a single host interface

Sometimes you need to expose a Docker port on a single host interface only, for example localhost. You can do this by prefixing the host port with the IP address of that interface:

docker run -p 127.0.0.1:$HOSTPORT:$CONTAINERPORT -t image

Use the docker port [id_of_the_container] command to verify the port mapping of that container.

Random exposure of Docker ports at run time

Want to publish all the ports a container exposes (those declared with EXPOSE) to random ports on the Docker host? Use the -P option with docker run:

docker run -d -P webapp

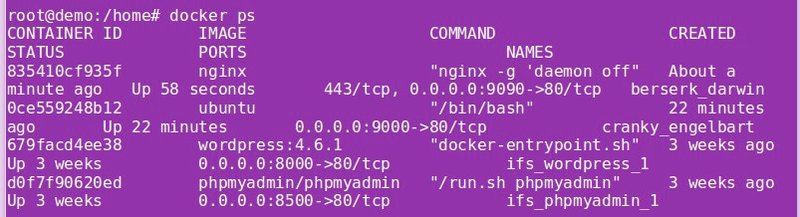

To verify the port mapping of a particular container, run docker ps after creating it.

In addition

Security is the #1 priority in any online project, so don't forget about additional security measures. A good approach is to create firewall rules that block unauthorized access to containers.

If you run into problems with Docker, you can always hire our experts to take care of your containerized infrastructure. We'd be happy to collaborate with you!
